Bringing Thought Control to Any PC

June 2, 2017

Medical Research, Robotics & High-Tech

MIT Technology Review – For people who are paralyzed, a brain-computer interface is sometimes the only feasible way to communicate. The idea is that sensors monitor brain waves and the nerve signals that control facial expressions. Predefined signals can then be used to control the computer.

The minds behind MinDesktop: BGU alumni Ariel Rozen, Ofir Tam and Ori Ossmy

But there are significant problems with this kind of approach. The first is that these systems are highly susceptible to noise, so they often make mistakes. The second is that they are awkward and clunky to use, so communication is painfully slow. Typing speeds can be as low as about one character per minute.

A team from BGU’s Department of Software and Information Systems Engineering, including students Ori Ossmy, Ofir Tam and Ariel Rozen (who have since graduated), supervised by Dr. Rami Puzis, Prof. Lior Rokach, Dr. Ohad Inbar, and Prof. Yuval Elovici, has developed a general-purpose brain-computer interface called MinDesktop.

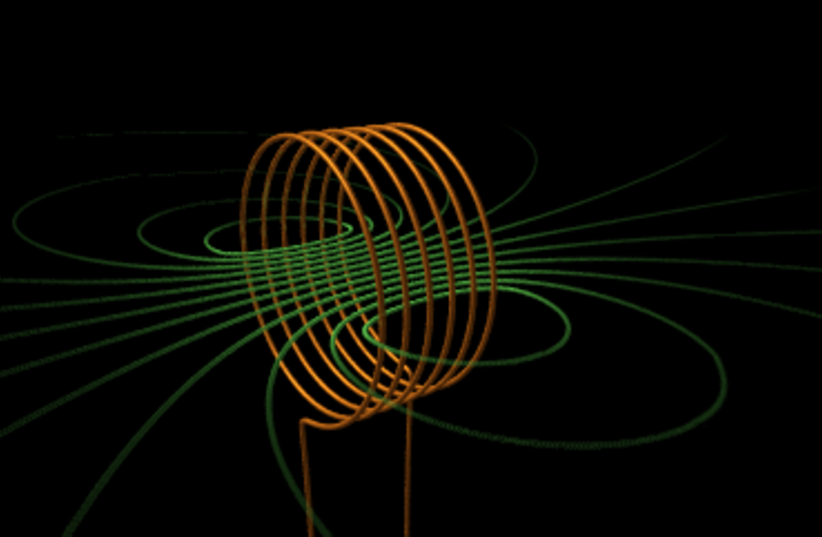

It allows users to control most aspects of a Windows PC using their thoughts, typing as fast as one character every 20 seconds. The system works with the Emotiv EPOC+, a 14-channel EEG neuroheadset, taking the signals the headset detects and organizing them into a relatively easy-to-use interface.

Out of the box, the Emotiv headset can recognize the nerve signals associated with various facial expressions. But it can also be trained to spot the brain-wave patterns associated with thinking about, say, a favorite flower or song or pet.

Each of these thoughts can then be used to trigger a different action in the software. In fact, the entire system is designed to work with just three inputs, so it can be adapted to any input device, provided that device can reliably distinguish three different signals.
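To illustrate why a three-input design makes the interface independent of the input hardware, here is a minimal sketch. It assumes three abstract commands, which we call `NEXT`, `SELECT` and `BACK`. These names, the `make_adapter` helper and the `Menu` class are all hypothetical, since the article does not say which action each of MinDesktop's three inputs performs. Any source (a facial expression, a trained thought, a key press) plugs in by mapping its raw signals onto those three commands. Unrecognized signals are treated as noise and ignored.

```python
from enum import Enum, auto


class Command(Enum):
    """Three abstract commands (hypothetical names, not MinDesktop's)."""
    NEXT = auto()    # move the highlight to the next option
    SELECT = auto()  # choose the highlighted option
    BACK = auto()    # return the highlight to the first option


def make_adapter(mapping):
    """Turn any raw input source into Commands.

    `mapping` maps raw signal labels to Commands and must cover all
    three. Signals not in the mapping become None (treated as noise).
    """
    if set(mapping.values()) != set(Command):
        raise ValueError("the input source must distinguish all three commands")
    return lambda raw: mapping.get(raw)


class Menu:
    """A minimal menu driven only by the three commands."""

    def __init__(self, options):
        self.options = list(options)
        self.index = 0

    def handle(self, command):
        if command is Command.NEXT:
            self.index = (self.index + 1) % len(self.options)
        elif command is Command.BACK:
            self.index = 0
        elif command is Command.SELECT:
            return self.options[self.index]
        return None  # nothing chosen yet (also covers None, i.e. noise)


if __name__ == "__main__":
    # Hypothetical signal labels for a trained headset.
    headset = make_adapter({
        "smile": Command.NEXT,
        "think_of_flower": Command.SELECT,
        "raise_brows": Command.BACK,
    })
    menu = Menu(["Browser", "Email", "Video"])
    for raw in ["smile", "blink", "smile", "think_of_flower"]:
        chosen = menu.handle(headset(raw))
        if chosen:
            print("Opened:", chosen)  # prints "Opened: Video"
```

Swapping the headset for a keyboard or a switch only means passing a different dictionary to `make_adapter`; the `Menu` logic is untouched.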

The interface has some unusual features. For example, users can select an item anywhere on the screen using a “hierarchical pointing device.” This divides the entire screen into four quarters, each in a different color.

Users select the quarter that contains the item of interest, and this quarter is itself divided into four smaller quarters, one of which the user selects, and so on. The subdivision continues until a single quarter is filled by the item of interest, which the user can then click by selecting it with their thoughts.
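The repeated quartering described above can be sketched as a simple recursive subdivision. This is an illustrative simulation, not MinDesktop's code: the `Rect` type, the `point_by_quarters` function, the quarter numbering (0 = top-left through 3 = bottom-right) and the stopping rule are all assumptions. In particular, "filled with the item of interest" is approximated here as "the region is no wider or taller than the item", and the simulated user always picks the quarter containing the target.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    x: float
    y: float
    w: float
    h: float

    def quarters(self):
        """Split into four: 0=top-left, 1=top-right, 2=bottom-left, 3=bottom-right."""
        hw, hh = self.w / 2, self.h / 2
        return [
            Rect(self.x, self.y, hw, hh),
            Rect(self.x + hw, self.y, hw, hh),
            Rect(self.x, self.y + hh, hw, hh),
            Rect(self.x + hw, self.y + hh, hw, hh),
        ]

    def contains(self, px, py):
        # Half-open bounds so a point on a shared edge belongs to exactly one quarter.
        return self.x <= px < self.x + self.w and self.y <= py < self.y + self.h


def point_by_quarters(screen, target, item_size):
    """Simulate hierarchical pointing toward `target` (x, y).

    Returns the list of quarter indices chosen and the final region,
    which is no larger than `item_size` in either dimension.
    """
    if item_size <= 0 or not screen.contains(*target):
        raise ValueError("target must be on screen and item_size positive")
    region, choices = screen, []
    while region.w > item_size or region.h > item_size:
        for i, quarter in enumerate(region.quarters()):
            if quarter.contains(*target):
                region = quarter
                choices.append(i)
                break
        else:
            raise RuntimeError("target fell outside every quarter")
    return choices, region


if __name__ == "__main__":
    choices, region = point_by_quarters(Rect(0, 0, 1920, 1080), (1500, 200), 48)
    print(len(choices), choices)  # prints "6 [1, 1, 2, 0, 3, 2]"
```

Because each selection halves the region's width and height, the number of selections grows only with the base-2 logarithm of the screen-to-item size ratio: under these assumptions, six selections narrow a 1920 × 1080 screen down to a 48-pixel item.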

This allows the user to open or close any application. An onscreen keyboard, predictive text, and other shortcuts speed up the process of communication.

The BGU team tested the software by asking 17 adults to use it on a standard PC laptop and then measuring how long they took to perform certain tasks, such as opening a folder, playing a video, and searching the internet for a topic.

“The results indicate that users can quickly learn how to activate the new interface and efficiently use it to operate a PC,” says Ori Ossmy.

After just three learning sessions, all the users were finishing their tasks more quickly than at the start and were able to send a simple e-mail.